Resizable Bar (ReBar) has been available on AMD and Nvidia graphics cards, along with Intel and AMD CPUs, for a few years, and you’ve probably heard of it if you keep up with PC gaming. Since its introduction, ReBar has become one of the essential features to enable on any gaming PC, right alongside memory overclocking profiles like Intel XMP. It’s so essential that most modern motherboards ship with the feature already enabled, so you don’t have to think about it.

You should leave the feature turned on for general use, even if it doesn’t provide a performance boost in every game. The further you dig into ReBar and its support, though, the more complicated it gets. Even if you can turn the feature on and forget about it, there are a lot of idiosyncrasies with ReBar that you should know about if you’re looking to get peak performance out of your PC.

What is ReBar?

The name Resizable Bar doesn’t really tell you anything about what the feature does. It’s a feature of PCI Express, the interface your graphics card plugs into, and it allows the

Without it, your CPU is only able to access 256MB chunks of your video memory at once, and that’s a bit of a problem. Modern

ReBar is the official name, but you’ll also see AMD’s Smart Access Memory (SAM). It’s the same thing as ReBar, just AMD’s branding for systems that pair an AMD GPU with an AMD CPU. In theory, SAM and ReBar should be identical, but as I’ll get into shortly, that isn’t exactly the case.

So, it’s all upside for ReBar, right? It lets your CPU access all of your video memory at once, which should lead to a performance boost. Indeed, ReBar does improve performance in some games, but your mileage will vary greatly depending on the hardware you have, the game you’re playing, and whether ReBar is actually active.
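To put that 256MB limit in perspective, here’s a quick back-of-the-envelope sketch in Python. It simply counts how many 256MB windows it would take to cover a card’s full video memory. The function name `windows_needed` and the 8GB, 16GB, and 24GB capacities are illustrative assumptions, not figures from any particular driver, and real drivers manage that window in more sophisticated ways than this arithmetic suggests.

```python
MIB = 1024 * 1024
GIB = 1024 * MIB

# The default window size the article describes (256MB without ReBar).
DEFAULT_WINDOW_BYTES = 256 * MIB


def windows_needed(vram_bytes: int, window_bytes: int = DEFAULT_WINDOW_BYTES) -> int:
    """Return how many fixed-size windows are needed to cover vram_bytes."""
    if vram_bytes <= 0 or window_bytes <= 0:
        raise ValueError("sizes must be positive")
    # Ceiling division, so a partial window still counts as one.
    return -(-vram_bytes // window_bytes)


if __name__ == "__main__":
    # Hypothetical VRAM capacities, chosen only for illustration.
    for capacity_gib in (8, 16, 24):
        count = windows_needed(capacity_gib * GIB)
        print(f"{capacity_gib}GB of VRAM spans {count} windows of 256MB")
    # prints 32, 64, and 96 windows respectively
```

With ReBar active, the idea is that the window can grow to cover the whole pool, so that count effectively drops to one.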

When it works

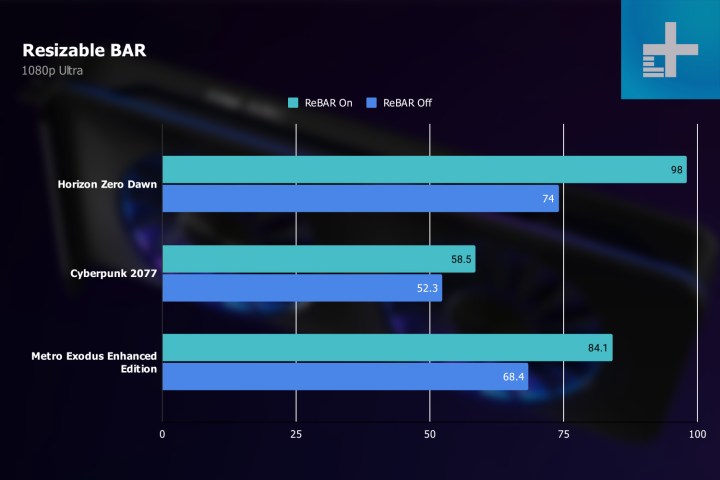

I don’t want to retread ground with ReBar here. If you haven’t seen the testing that was conducted a couple of years back when Nvidia enabled the feature, I’ll point you in the direction of Guru3D. In supported titles, you’ll generally see a performance increase in the low single digits. The boost is never huge, but it’s enough that you’ll want to leave ReBar turned on.

That holds true in modern games. Testing at 1080p with Nvidia’s RTX 4060 Ti and the AMD Ryzen 9 7950X, I saw a minor change in performance in both Resident Evil 4 and Hogwarts Legacy. This shouldn’t work, and I’ll get to why soon, but the results were repeatable.

Far more games show no difference at all, though. You can see that at work in F1 2022, where turning on ReBar did nothing for performance, with or without ray tracing.

The problem with looking at benchmarks on just one system, though, is that ReBar is highly dependent on the hardware you’re running. A great example is Intel’s Arc A770/A750. If you turn ReBar off with one of these

For Intel and AMD systems, ReBar is a solid feature that sometimes provides a minor performance lift. With Nvidia, however, the situation is a lot more complicated.

The Nvidia caveat

As mentioned in the last section, we shouldn’t see a performance boost in

Further complicating this issue is that Nvidia hasn’t published an updated list of supported ReBar games since it introduced the feature in 2021. At the time, 19 games were supported, and that’s the only official list we’ve ever seen. Checking the Nvidia Control Panel doesn’t help, either. It will show that the feature is on, even if an Nvidia driver is blocking it in most of your games.

There are more supported games than the ones Nvidia published in 2021, though, and users know that through the Nvidia Profile Inspector. This third-party tool basically breaks the chains on the Nvidia Control Panel, showing you all of the configuration options that normally aren’t available to users. Through this, users have discovered that Nvidia has added support for additional games over the past two years, including Forza Horizon 5 and the Dead Space Remake. You can verify this yourself by downloading the Nvidia Profile Inspector and checking the profiles for these games.

This tool also allows you to force ReBar on by enabling it for games that aren’t technically supported. I did that in both Hogwarts Legacy and

That may just look like variance, and in fairness, it could be, but forcing ReBar on in games that aren’t supported can have a devastating impact on performance. In The Last of Us Part I, for example, turning on ReBar in the BIOS did nothing for performance. It’s not a supported game, so ReBar isn’t actually working on the Nvidia GPU. However, when you force it on in the Nvidia Profile Inspector, there’s a massive drop in performance.

This is probably a hint as to why Nvidia doesn’t talk about ReBar much these days. It doesn’t seem to provide much upside even in supported games, and in some unsupported titles, there could be a massive performance loss.
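If you want to test this yourself, the hard part is telling a real ReBar effect from ordinary run-to-run variance. Here’s a minimal Python sketch of one crude way to do it: average several benchmark runs with ReBar off and on, then check whether the gap is bigger than the spread between runs. The function names, the `k=2.0` threshold, and the frame rates in the example are all placeholders I made up for illustration, not measurements from this article, and this is a rough heuristic rather than a proper statistical test.

```python
from statistics import mean, stdev


def percent_change(baseline: float, candidate: float) -> float:
    """Percent change from baseline to candidate (positive means faster)."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (candidate - baseline) / baseline * 100


def exceeds_noise(off_runs: list[float], on_runs: list[float], k: float = 2.0) -> bool:
    """Crude check: is the gap between averages larger than k times the
    bigger of the two run-to-run standard deviations?"""
    if len(off_runs) < 2 or len(on_runs) < 2:
        raise ValueError("need at least two runs per configuration")
    gap = abs(mean(on_runs) - mean(off_runs))
    noise = max(stdev(off_runs), stdev(on_runs))
    return gap > k * noise


if __name__ == "__main__":
    # Placeholder average-fps numbers, NOT real benchmark results.
    rebar_off = [100.0, 101.0, 99.0]
    rebar_on = [100.5, 101.5, 99.5]

    change = percent_change(mean(rebar_off), mean(rebar_on))
    print(f"Change with ReBar on: {change:+.1f}%")
    print("Beyond run-to-run noise:", exceeds_noise(rebar_off, rebar_on))
```

In this made-up example, the half-frame gap sits well inside the spread between runs, so the check reports it as noise. A drop on the scale described for The Last of Us would clear the threshold easily.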

One size doesn’t fit all

The wisdom of turning ReBar on by default stands, but knowing the context around that is important. For AMD and Intel, you can pretty safely leave ReBar on without any problems. For Nvidia, you should leave it turned on as well, while recognizing that it’s not doing anything in the vast majority of your games. Nvidia’s original idea was to test individual games and enable ReBar only in those that show a positive impact, so you don’t have to worry about whether you’re getting the best performance.

But that hands-off approach doesn’t suit every PC gamer. If you’re looking to find those minor performance improvements, there are games that benefit from forcing ReBar on through Nvidia Profile Inspector, as showcased by Hogwarts Legacy. Be prepared for a lot of fiddling, though, as there are games like The Last of Us Part I that show a big performance loss.

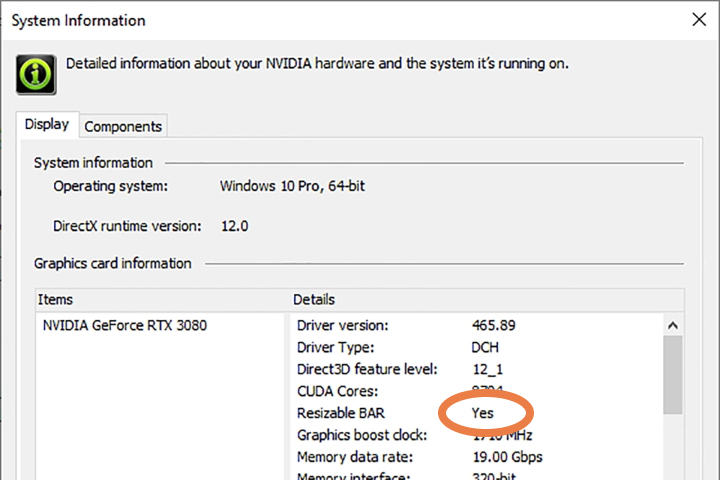

You should already have ReBar enabled on your PC, but if you don’t, make sure to check out our guide on how to enable Resizable Bar. You can quickly check whether the feature is enabled through the Nvidia Control Panel (click System Information) or, if you have an AMD GPU, through Radeon Software.
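If you’d rather check from a script, one rough approach on Nvidia hardware is to read the BAR1 size that `nvidia-smi -q -d MEMORY` reports. The Python sketch below assumes the report contains a `BAR1 Memory Usage` block with a `Total` line in MiB, and that a total above the 256MB default suggests the BAR has been resized. Both are assumptions that may not hold on every driver version, and the helper names are hypothetical. Like the Control Panel, this likely only tells you ReBar is on at the system level, not whether the driver is using it in a particular game.

```python
import re
import subprocess
from typing import Optional

# Assumed report layout: a "BAR1 Memory Usage" heading followed by a
# "Total : <n> MiB" line. The format may differ between driver versions.
BAR1_TOTAL = re.compile(r"BAR1 Memory Usage\s+Total\s*:\s*(\d+)\s*MiB")


def bar1_total_mib(report: str) -> Optional[int]:
    """Return the first BAR1 total (in MiB) found in the report, or None."""
    match = BAR1_TOTAL.search(report)
    return int(match.group(1)) if match else None


def looks_resized(report: str, default_mib: int = 256) -> bool:
    """Heuristic: a BAR1 total above the 256MB default suggests ReBar is on."""
    total = bar1_total_mib(report)
    return total is not None and total > default_mib


def query_nvidia_smi() -> str:
    """Run nvidia-smi and return its memory report as text.

    Raises FileNotFoundError if nvidia-smi is not installed.
    """
    result = subprocess.run(
        ["nvidia-smi", "-q", "-d", "MEMORY"],
        capture_output=True,
        text=True,
        check=True,
    )
    return result.stdout
```

On a machine with an Nvidia GPU and driver installed, `looks_resized(query_nvidia_smi())` gives a quick yes or no. With more than one GPU, this sketch only looks at the first BAR1 block in the report.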
